The main goal of this site is to provide quality tutorials, tips, courses, tools, and other resources that allow anyone to learn something new and solve programming challenges. Some people claim that Airflow is cron on steroids, but to be more precise, Airflow is an open-source ETL tool for planning, generating, and tracking processes.

Building A Production Level Etl Pipeline Platform Using Apache Airflow By Aakash Pydi Towards Data Science

Apache Airflow programmatically creates, schedules, and monitors workflows.

It is open source, hence it is free, and it has a wide range of support as well. BigData-ETL was founded in March 2018 by Paweł Cieśla. Prerequisite: Postgres installed on your local machine.

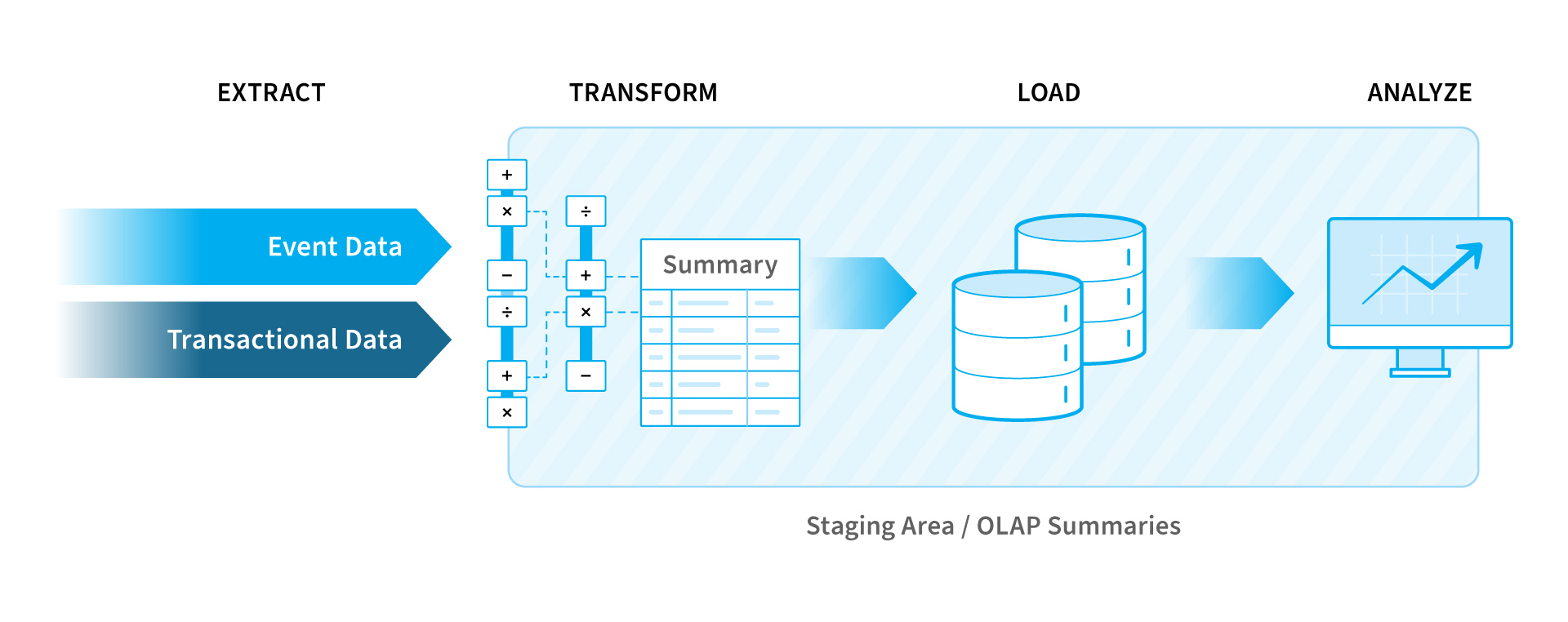

Prerequisite: Ubuntu installed in a virtual machine. ETL stands for extract, transform, and load, and is a traditionally accepted way for organizations to combine data from multiple systems into a single database, data store, data warehouse, or data lake. Cloud-based ETL Tools vs.
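The three ETL stages described above can be sketched in plain Python. This is a minimal illustration using only the standard library, not the method of any particular ETL tool; the sample data, the `scores` table, and the function names are assumptions made for the example.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this would come from files, APIs, or databases.
RAW_CSV = """name,score
alice,90
bob,
carol,75
"""


def extract(text):
    """Extract: read raw rows from a CSV source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop rows with a missing score, then normalize casing and types."""
    return [
        (row["name"].title(), int(row["score"]))
        for row in rows
        if row["score"].strip()
    ]


def load(records, conn):
    """Load: write the cleaned records into a single target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS scores (name TEXT, score INTEGER)")
    conn.executemany("INSERT INTO scores VALUES (?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")  # in-memory database stands in for a warehouse
load(transform(extract(RAW_CSV)), conn)
result = conn.execute("SELECT name, score FROM scores ORDER BY name").fetchall()
print(result)
```

Keeping each stage in its own function makes it easier to hand the stages to an orchestrator later as separate tasks.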

Tools are further organized into tool categories under the Tool Palette, and you can see all the categories further in this article. Airflow - Python-based platform for running directed acyclic graphs (DAGs) of tasks. Import file selection in SQL Assistant.

As we have seen, you can also use Airflow to build ETL and ELT pipelines. About BigData-ETL: BigData-ETL is a free online resource site. The production environment we are going to write the ETL pipeline for consists of a GitHub code repository, a DockerHub image repository, an execution platform such as Kubernetes, and an orchestration tool such as the container-native Kubernetes workflow engine Argo Workflows or Apache Airflow.

Task: a defined unit of work; these are called operators in Airflow. ETL can be used to store legacy data or, as is more typical today, aggregate data to analyze and drive business decisions. Using Airflow makes the most sense when you perform long ETL jobs or when a project involves multiple steps.

Installed Apache Airflow. A curated list of awesome open source workflow engines. A notable tool for dynamically creating DAGs from the community is dag-factory.

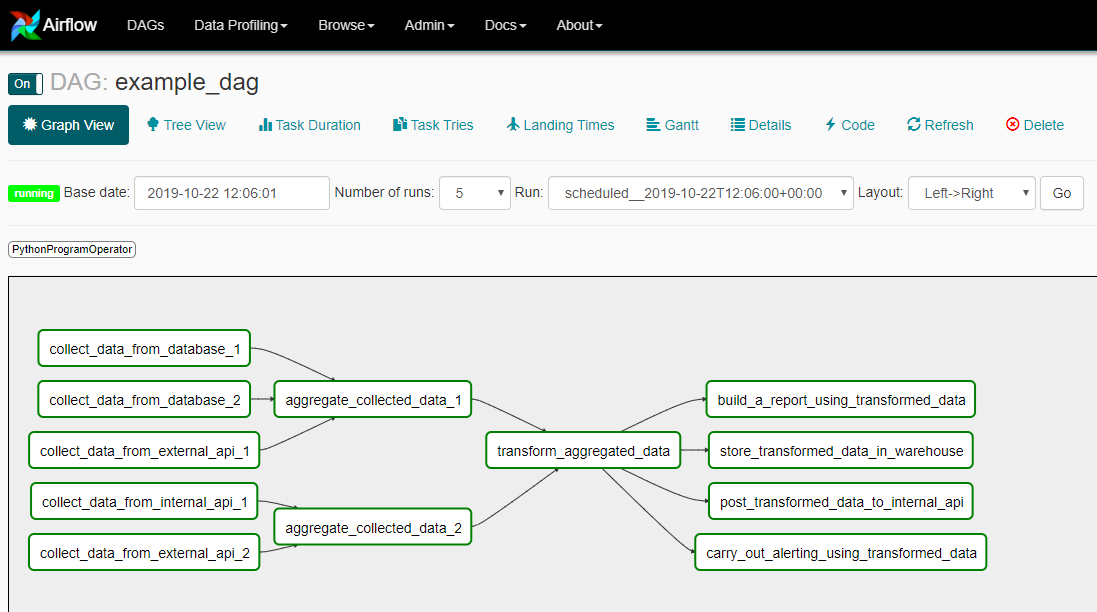

Apache Airflow knowledge is in high demand in the data engineering industry. This tutorial is intended for database admins, operations professionals, and cloud architects interested in taking advantage of the analytical query capabilities of BigQuery and the batch. Airflow represents workflows as Directed Acyclic Graphs, or DAGs.
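To make the DAG idea concrete, here is a small sketch that orders a set of dependent tasks using Python's standard `graphlib` module (Python 3.9+). It does not use Airflow itself, and the task names are hypothetical; it only shows how a directed acyclic graph determines a valid execution order.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each key maps a task to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "clean_orders": {"extract"},
    "clean_customers": {"extract"},
    "load": {"clean_orders", "clean_customers"},
}

# static_order() yields tasks so that every task comes after its dependencies.
order = list(TopologicalSorter(dependencies).static_order())
print(order)
```

The two `clean_*` tasks have no dependency on each other, so either may come first; a scheduler is free to run them in parallel. If the graph contained a cycle, `graphlib` would raise `CycleError`, which is why workflows must be acyclic.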

Astronomer makes it possible to run Airflow on Kubernetes. Data can be transformed as an action in the. In this article, I am going to discuss Apache Airflow, a workflow management system developed by Airbnb.

Apache Airflow is a tool that can create, organize, and monitor workflows. They shared practical solutions for the challenges t. To make sure that each task of your data pipeline is executed in the correct order and gets the resources it requires, Apache Airflow is a widely used open-source tool for scheduling and monitoring.

But so do many of the cloud-based tools on the market. You can restart from any point within the ETL process. Airflow is a tool for authoring, scheduling, and monitoring workflows as directed acyclic graphs of tasks.

This tutorial demonstrates how to use Dataflow to extract, transform, and load (ETL) data from an online transaction processing (OLTP) relational database into BigQuery for analysis. It can also modify the scheduler to run the jobs as and when required. Moving forward you can simply select any tool and drag it to the workflow canvas to.

It is one of the most trusted platforms that is used for orchestrating workflows and is widely used and recommended by top data engineers. This tool provides many features like a proper visualization of the data. Visit the official site from here.

The Tool Palette is the place where you can find all the tools, along with their names and images to recognize them. Step 6: The import will then begin in SQL Assistant. Since you have to deploy it, Airflow is not an optimal choice for small ETL jobs.

A DAG is a topological representation that explains the way data flows within a system. When we talk about ETL tools, we mean full-blown ETL solutions. 28. Apache Airflow.

Whether you are a data scientist, data engineer, or software engineer, you will definitely find this tool useful. It is compatible with cloud providers such as GCP, Azure, and AWS. Task instance: an individual run of a single task. Task instances also have an indicative state, which could be running, success, failed, skipped, up for retry, etc.
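The task-instance states mentioned above can be illustrated with a short plain-Python sketch. This is not Airflow's implementation; the `State` enum, `run_task_instance`, and the `flaky` task are hypothetical names used only to show how one run of a task can move through running, up for retry, and success or failed.

```python
from enum import Enum


class State(Enum):
    RUNNING = "running"
    SUCCESS = "success"
    FAILED = "failed"
    UP_FOR_RETRY = "up_for_retry"


def run_task_instance(task, retries=2):
    """Run a callable once plus up to `retries` retries; return (final_state, history)."""
    history = []
    for attempt in range(retries + 1):
        history.append(State.RUNNING)
        try:
            task()
        except Exception:
            if attempt < retries:
                # Retries remain, so the instance waits for another attempt.
                history.append(State.UP_FOR_RETRY)
                continue
            # No retries left: the instance ends as failed.
            history.append(State.FAILED)
            return State.FAILED, history
        history.append(State.SUCCESS)
        return State.SUCCESS, history


# Hypothetical task that fails on its first call and succeeds afterwards.
calls = {"count": 0}


def flaky():
    calls["count"] += 1
    if calls["count"] < 2:
        raise RuntimeError("transient failure")


final_state, history = run_task_instance(flaky, retries=2)
print(final_state.value, [state.value for state in history])
```

Recording the history of states, rather than only the final one, is what lets a monitoring view show that a task succeeded only after a retry.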

Choosing the right ETL tool is a critical component of your overall data warehouse structure. Dag-factory is an open-source Python library for dynamically generating Airflow DAGs from YAML files. Airflow, Airbyte, and dbt are three open-source projects with different focuses but lots of overlapping features.

Originally, Airflow is a workflow management tool, Airbyte is a data integration (EL steps) tool, and dbt is a transformation (T step) tool. So Apache Airflow and Luigi certainly qualify as tools. Apache Airflow is a top-level project of the Apache Software Foundation (ASF).

Essentially this means workflows are represented by a set of tasks and. Azkaban - Batch workflow job scheduler created at LinkedIn to run Hadoop jobs. Shopify engineering shared its experience in the company's blog post on how to scale and optimize Apache Airflow for running ML and data workflows.

To use dag-factory you can install the package in. Open Source ETL Tools. Argo Workflows - Open source container-native workflow engine for getting work done on Kubernetes.

Apache Airflow is one such tool that can be very helpful for you. Here we are selecting the Student_Info.csv file to import the Excel data into a Teradata table. However, Airflow is not a library.

Installed packages: if you are using the latest version of Airflow, run `pip3 install apache-airflow-providers-postgres`. First, generate a DAG file within the airflow/dags folder by using the following command. Step 5: Execute the Insert query by pressing F5 or using the toolbar option, which will ask for the location of the import file to begin the loading. Apache Airflow manages the execution dependencies among jobs in a DAG and supports job failures, retries, and alerts.

Building on Apache Spark, Data Engineering is an all-inclusive data engineering toolset that enables orchestration automation with Apache Airflow, advanced pipeline monitoring, visual troubleshooting, and comprehensive management tools to streamline ETL processes across enterprise analytics teams. Just select the CSV file that has the required data. This tool became very popular because it allows modeling workflows in Python code, which can be tested, retried, and scheduled, among many other features.

You can dive deeper into some of these concepts with these clear articles and their examples: Data Engineering 101: Getting Started with Apache Airflow.

Bigdata Etl 4 Easy Steps To Setting Up An Etl Data Pipeline From Scratch By Burhanuddin Bhopalwala Towards Data Science

Python Etl Tools Best 8 Options Phone Solutions Python Programming Tutorial

Airflow Etl Key Benefits And Best Practices To Implement It Successfully N Ix

Etl Process And Tools In Data Warehouse Nix United

Https Docs Dagster Io Data Scientist Scientist Engineering

Etl Is Dead Long Live Streams Real Time Streams W Apache Kafka Are You Enthusiastic About Sharing Your Knowledge With Machine Learning Apache Kafka Time News